If the words npm and Yarn mean nothing to you, then I envy you. They are Node.js package managers. JavaScript engineers like me use them to manage the packages our projects depend on.

npm gets the job done, but it's slow. This is where Yarn is your knight in shining armour. Yarn is a drop-in replacement, and it's faster.

For many projects, it was a simple choice to switch over when Yarn was released in 2016.

Yarn Plug’n’Play

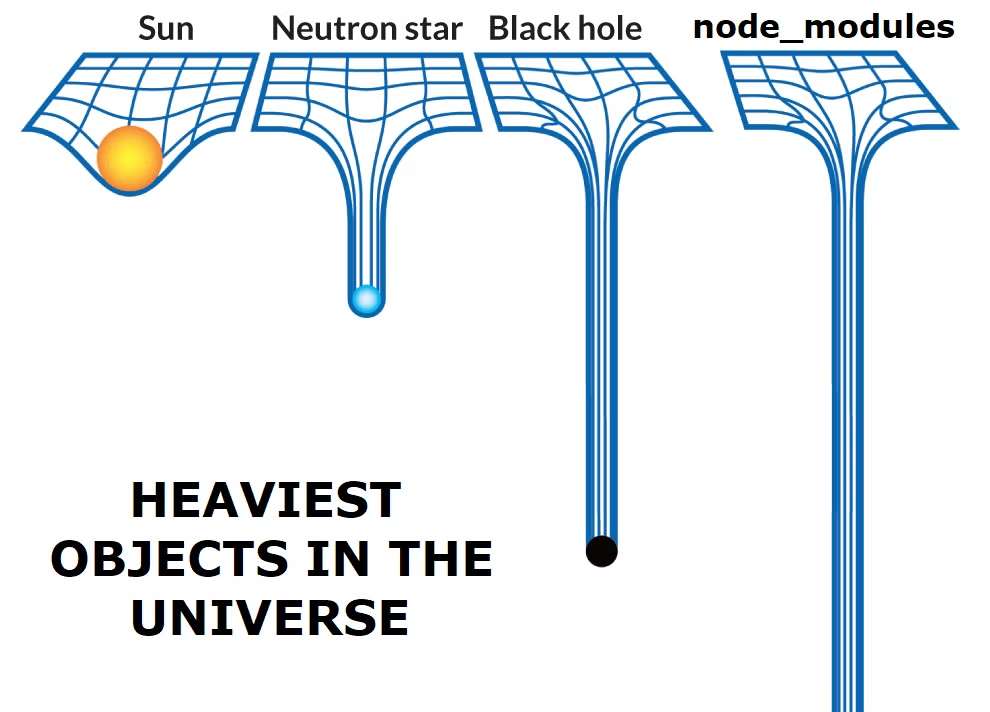

Typically, npm installs all dependencies into a folder called node_modules. You may have heard rumours that these folders are gigantic. That's not an exaggeration: they can easily grow past 1GB.

There are many problems with node_modules. For example, whenever two packages need different versions of the same dependency, one of them gets its own nested node_modules with its own copy. That leads to package duplication and thousands upon thousands of folders, and it's also why the folder gets so darn big.
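To make the duplication concrete, here is a small TypeScript sketch that counts how many copies of each package version a nested install tree contains. The tree, its paths and the countCopies function are all made up for illustration; it only shows the arithmetic, not how npm builds the tree.

```typescript
// Hypothetical sketch: count installed copies of each package version.
interface InstalledCopy {
  path: string; // where this copy lives on disk (made-up paths below)
  name: string;
  version: string;
}

// Group copies by name@version and count them.
function countCopies(copies: readonly InstalledCopy[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const { name, version } of copies) {
    const key = `${name}@${version}`;
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  return counts;
}

// Hypothetical tree: lodash 4 sits at the top level, but two packages that
// need lodash 3 each get their own nested copy of it.
const tree: InstalledCopy[] = [
  { path: "node_modules/lodash", name: "lodash", version: "4.17.21" },
  { path: "node_modules/pkg-a/node_modules/lodash", name: "lodash", version: "3.10.1" },
  { path: "node_modules/pkg-b/node_modules/lodash", name: "lodash", version: "3.10.1" },
];

const counts = countCopies(tree);
// Every copy beyond the first of a given name@version is redundant on disk.
const redundant = Array.from(counts.values()).reduce((sum, n) => sum + n - 1, 0);
console.log(counts.get("lodash@3.10.1"), redundant); // prints 2 1
```

A store that keeps exactly one copy per name@version would make the redundant count zero by construction.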

Yarn PnP solves this by getting rid of node_modules entirely. Woohoo 🎉

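But don't worry, it's replaced with something better. When you run yarn install, Yarn stores each package once, as a compressed archive in a cache, instead of unpacking it into node_modules. It also generates a single loader file (.pnp.cjs in current versions) that records where every package lives and which dependencies it may use.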

Then when you run Node, Yarn injects a custom loader that tells Node.js where to find the packages you import and require.
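Conceptually, this swaps a search through folders for a lookup table. The TypeScript below is a minimal sketch of that idea, not Yarn's real data format or API: the PackageInfo shape, the resolveFromTable function, the package keys and the /store/ paths are all hypothetical. Each package records where its files live and which dependencies it declared, so resolving an import becomes a pair of map lookups.

```typescript
// Hypothetical, simplified model of a PnP-style resolution table.
// Yarn's real data is more detailed and structured differently.
interface PackageInfo {
  location: string; // where the package's files live (made-up paths)
  dependencies: Map<string, string>; // declared name -> key in the table
}

const table = new Map<string, PackageInfo>([
  ["my-app", {
    location: "/project/",
    dependencies: new Map([["dep-a", "dep-a@1.0.0"]]),
  }],
  ["dep-a@1.0.0", {
    location: "/store/dep-a@1.0.0/",
    dependencies: new Map([["dep-b", "dep-b@2.0.0"]]),
  }],
  ["dep-b@2.0.0", { location: "/store/dep-b@2.0.0/", dependencies: new Map() }],
]);

// Resolve `request` as imported from inside the package `issuer`.
function resolveFromTable(issuer: string, request: string): string {
  const issuerInfo = table.get(issuer);
  if (!issuerInfo) throw new Error(`Unknown package: ${issuer}`);
  // Only the dependencies the issuer declared are visible to it.
  const key = issuerInfo.dependencies.get(request);
  if (key === undefined) {
    throw new Error(`${issuer} tried to import ${request}, which it does not declare`);
  }
  const target = table.get(key);
  if (!target) throw new Error(`No table entry for ${key}`);
  return target.location;
}

console.log(resolveFromTable("my-app", "dep-a")); // prints /store/dep-a@1.0.0/
console.log(resolveFromTable("dep-a@1.0.0", "dep-b")); // prints /store/dep-b@2.0.0/
```

Because the table is keyed by the importing package, the same lookup that finds a package also knows whether the importer is allowed to see it.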

Strictness for the good of the ecosystem

Yarn PnP also protects against ghost dependencies: packages your code imports even though they aren't listed in your package.json.

Wait, that shouldn't work at all! True, but imagine this: your application needs dependency A, and dependency A needs dependency B.

Because npm usually hoists dependency B into the top-level node_modules folder right next to A, you can now import both, even though you only listed A.

Later you decide to remove dependency A, and your application breaks. I have faith that you will find the issue quickly, but it's still annoying and could have been avoided.

Because PnP only lets a package import the dependencies it has declared, it catches ghost dependencies immediately: the import fails with an error instead of silently working.
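The scenario above boils down to a set difference, which the TypeScript sketch below spells out. The function name findGhostDependencies and the package names dep-a and dep-b are hypothetical; the point is that a hoisted node_modules resolves anything installed, while PnP-style strictness resolves only what is declared.

```typescript
// Hypothetical sketch of a ghost-dependency check.
// A ghost dependency is imported, happens to be installed (e.g. hoisted by
// npm), but is not declared in package.json.
function findGhostDependencies(
  declared: ReadonlySet<string>, // names listed in package.json
  installed: ReadonlySet<string>, // names present in a hoisted node_modules
  imported: readonly string[], // bare specifiers your code imports
): string[] {
  // Imports that are neither declared nor installed fail under both models,
  // so they are not reported here.
  return imported.filter((name) => installed.has(name) && !declared.has(name));
}

// The app declares dep-a; dep-a depends on dep-b, which gets hoisted.
const declared = new Set(["dep-a"]);
const installed = new Set(["dep-a", "dep-b"]);
console.log(findGhostDependencies(declared, installed, ["dep-a", "dep-b"])); // prints [ 'dep-b' ]
```

With a hoisted node_modules, the dep-b import works by accident. Remove dep-a and dep-b disappears with it, which is exactly the breakage PnP surfaces up front.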

Yarn 2.0 uses PnP by default

When Yarn Modern (also known as Yarn Berry) arrived with version 2.0 in 2020, it used the PnP install strategy by default. To put it simply, migrating was difficult and often impossible.

It was no longer a drop-in replacement like version 1 had been, and lots of projects could not switch.

If a package didn't work under PnP, you had to rely on its maintainers to fix it, and often that never happened.

I am very sympathetic to the Yarn maintainers for pushing this change and trying to improve the JS ecosystem for good. But in the end, it prevented many from upgrading at all.

Because of this, many projects still use Yarn v1, and those that have upgraded have skipped v2 entirely.

Not long after launch, a plugin to re-enable node_modules support was released, but alas, it was too late.

By Yarn v3, the node_modules linker was a well-supported option that takes a single line of configuration (nodeLinker: node-modules in .yarnrc.yml), making migration possible again.

Package Manager usage visualised

I analysed around 10,000 GitHub repositories to see how the issues around Yarn Plug'n'Play affected adoption.

The results are not too surprising, except Yarn v2 appears to be missing completely.

I expected very little adoption, but finding none at all was a bit suspicious.

For transparency, this is how I detected the Yarn version:

- If package.json contains a Yarn version, use that.
- If there is a yarn.lock but it's not valid YAML, it's Yarn v1.
- If there is a yarn.lock and it's valid YAML, it's Yarn v2.
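The detection rules can be sketched in TypeScript as follows. This is a reconstruction under assumptions, not the script used for the analysis: it assumes the Yarn version in package.json comes from the packageManager field (for example "yarn@3.6.4"), and it takes the YAML check as a parameter (isValidYaml) so that any YAML parser can be plugged in. The name detectYarnVersion is hypothetical.

```typescript
// Hypothetical sketch of the Yarn version detection heuristic.
interface PackageJson {
  packageManager?: string; // assumed source of the version, e.g. "yarn@3.6.4"
}

type YamlCheck = (text: string) => boolean;

function detectYarnVersion(
  pkg: PackageJson,
  lockfile: string | null, // contents of yarn.lock, or null if absent
  isValidYaml: YamlCheck,
): string | null {
  // Rule 1: an explicit Yarn version in package.json wins.
  const match = pkg.packageManager?.match(/^yarn@(\d+)\./);
  if (match) return `v${match[1]}`;

  // Without a yarn.lock there is nothing further to go on.
  if (lockfile === null) return null;

  // Rule 2: Yarn v1 lockfiles look YAML-like but are not valid YAML.
  if (!isValidYaml(lockfile)) return "v1";

  // Rule 3: valid YAML means a modern (Berry) lockfile. v2 and later all use
  // YAML lockfiles, so this rule alone cannot tell them apart.
  return "v2";
}
```

For real use, pass a predicate backed by an actual YAML parser that returns false when parsing throws.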

Deno, where are you?

Deno is an alternative JS runtime that was meant to address some of the inherent flaws in Node.js.

One of the key differences is how it handles dependencies (there seems to be a bit of a pattern here).

With Deno, you simply import a package from a URL, and Deno fetches the package at runtime.

```ts
import { Client } from "https://deno.land/x/[email protected]/mod.ts";
```

That's cool, but at launch there was no npm package support, making a migration from Node.js impossible.

Deno has since matured: it now supports importing npm packages as well as JSR packages, and has a Node.js compatibility layer.

It seems the original intent of Deno wasn't to replace Node.js, but just to be a modern alternative for new projects.

The problem with the JS ecosystem is that it is practically impossible to build anything without dependencies. So if you wanted to use Deno at launch, you had to rely on the standard library and packages from deno.land (the Deno package registry).

Because of this, Deno didn't see much adoption.

The tool must be drop-in

The lesson I've learnt from the Yarn v2 launch is that when a tool is designed to replace another tool, it must be drop-in.

Or if not drop-in, it must be very easy to migrate.

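Consider Vitest, which is intended as a replacement for Jest (a JS test runner). It's not drop-in, but it's very close: its API mirrors Jest's describe, it and expect, with the vi object standing in for jest (vi.fn() instead of jest.fn(), for example).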

A masterclass

Bun is a modern JS runtime designed for speed, and in many benchmarks it is much faster than both Node.js and Deno. On top of being a runtime, it's also a test runner, a package manager and a bundler.

I have been following its progress for years, and it's always been clear that Bun had the potential to dethrone Node.js.

Why? Because it barely requires a migration. Most smaller projects can switch to Bun right now. For larger projects, the transition is not always smooth, but bugs and incompatibilities are constantly being fixed.

Teams that don't want to migrate fully can adopt individual components:

- Node.js → Bun Runtime

- Jest → Bun Test Runner

- Vite/Babel → Bun Bundler

- npm → Bun Package Manager

Every part of Bun conforms to the status quo while being better and faster. The package manager uses package.json and node_modules, and the test runner accepts Jest-style test files, to name just two examples.

So hats off to the Bun team, and I can't wait to migrate this website to Bun.

Conclusion

That's it. If you want to build a new and exciting tool, make sure people can actually migrate to it, and make it easy to do so.

And if you want anyone to upgrade when you release a new major version, make sure there is a migration path.